How the Disk Access Path Has Evolved in the Last Decade

Abstract

This blog article discusses the evolution of the disk access path, from bygone years to the currently trending Non-Volatile Memory Express (NVMe). Engineers are well aware of the steep latency increase from a few nanoseconds for internal cache hits, to a few hundred nanoseconds for RAM, and all the way up to several milliseconds for mechanical hard disk access. Until recently, the latency of external disk access was a severe bottleneck that limited overall system performance.

With the advent of solid-state memory technologies such as NAND/NOR Flash, access times and power requirements dropped dramatically. This finally brought storage onto the well-known Moore's law performance curve. Solid-state drives (SSDs) replaced the traditional mechanical, rotating magnetic media with solid-state memory, but kept the same disk access protocol for backward compatibility.

Soon it became clear that, even with solid-state storage media, these traditional disk access protocols remained a bottleneck.

In this blog article, let us see how computer designs and disk access protocols have evolved over the years to give us today's high-bandwidth, low-latency disk IO path: NVMe.

Evolution of computer design toward today's high-performance, low-latency disk access

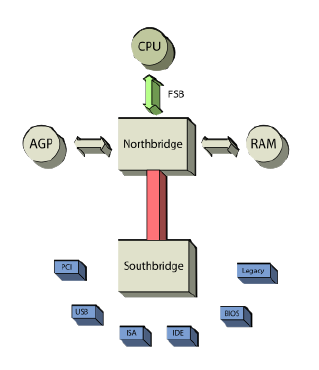

Let us roll back a decade and look at how computers were designed then. A computer contained a CPU with two external chipsets, the Northbridge and the Southbridge. Please see Figure 1 for such a design.

The Northbridge chipset, also called the Memory Controller Hub (MCH), connected directly to the CPU and provided high-speed access to external memory and the graphics controller. The Southbridge, also called the IO hub, connected all the low-speed IO peripherals. Spinning hard disks were taken for granted as low-performance components and were therefore connected to the Southbridge.

Figure 1: Computer design with Northbridge/Southbridge chipsets

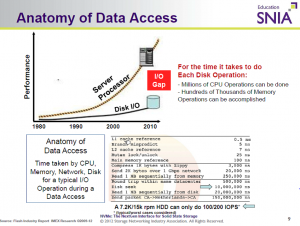

Figure 2: Anatomy of Disk Access – source: SNIA

With each CPU generation, processors grew faster, so any data access outside the CPU hurt performance more severely because of the ever-increasing IO delay. Larger caches helped to an extent, but it soon became obvious that pushing CPUs to higher clock speeds every generation would not deliver the best performance unless the external disk access path scaled along with CPU performance. Figure 2 captures the wide gap between processor performance and disk performance.
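
The arithmetic behind this gap is simple: a disk access takes a fixed amount of wall-clock time, so the number of CPU cycles spent waiting grows in proportion to the clock rate. The sketch below assumes a disk latency of about 5 ms and a few round clock rates; these are illustrative order-of-magnitude values, not measurements from this article.

```python
# Illustrative arithmetic only: the disk latency and clock rates below are
# assumed order-of-magnitude values, not measurements from this article.

DISK_LATENCY_S = 5e-3  # assumed ~5 ms for one random access on a spinning disk


def stall_cycles(latency_s: float, clock_hz: float) -> int:
    """Return the number of CPU clock cycles that elapse while waiting latency_s seconds."""
    return round(latency_s * clock_hz)


# The disk latency stays fixed while the clock rate rises.
for clock_ghz in (1.0, 2.0, 4.0):
    cycles = stall_cycles(DISK_LATENCY_S, clock_ghz * 1e9)
    print(f"{clock_ghz:.1f} GHz CPU: {cycles:,} cycles per disk access")
```

Under these assumptions, a single access costs 5,000,000 cycles at 1 GHz and 20,000,000 cycles at 4 GHz: each faster CPU generation wastes proportionally more work per disk access unless the disk path itself gets faster.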

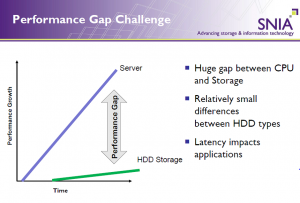

As a first step toward reducing the high latency of external storage access, the memory controller was integrated directly into the CPU; in other words, the Northbridge chipset was completely subsumed by the CPU. This left one fewer bridge on the IO path to external disks. Even so, hard disk access latency still hurt overall CPU performance badly. Yet hard disks could not simply be dropped: RAM alone cannot provide their capacity or their data persistence, so they remained critical components. Figure 3 captures this performance gap.

Figure 3: Disk Access Performance Gap – Source: SNIA

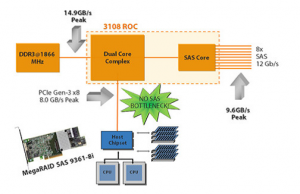

Figure 4: Typical SAS drive access path

The computer industry underwent another significant evolution by returning to serial protocols for high-speed interfaces. Storage access protocols became serial (e.g., SAS), and computer buses followed suit (e.g., PCI Express).

The AHCI protocol standardized access to SATA disks, SAS and FC drives took over from parallel SCSI, and serial protocols came to dominate. Each of these protocols offered higher speeds and additional networked-storage features, but the drives themselves were still mechanical. All of these storage protocols needed a dedicated host bus adapter (HBA) attached to the CPU's local bus, which translated requests from the CPU (over PCI/PCI-X/PCIe) into the storage protocol (SAS/SATA/FC) and translated the responses back. As Figure 4 shows, a SAS disk drive could be reached only through a dedicated HBA.

Computer local buses, not to be left behind, also went serial with PCI Express. PCI Express came into its own: although it is physically different from the earlier parallel PCI/PCI-X buses, its software interface remained compatible. Southbridge chipsets carried PCI Express, and its added performance drove mass adoption. The high point of PCI Express was its integration directly into the CPU, which removed any external bridge chipset from the path to hard disks. With PCI Express as the de facto high-speed peripheral interface coming directly out of the CPU, the bandwidth and performance of external peripherals could scale directly with the CPU.
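
A toy model makes this trend concrete: list the components a request crosses between the CPU and the drive in each design described so far, and count the ones in between. This is a minimal sketch; the path names and component labels are hypothetical simplifications chosen for illustration, and it assumes a PCIe SSD attached to PCIe lanes that come directly out of the CPU.

```python
# Toy model of the CPU-to-drive paths described above. The path names and
# component labels are hypothetical simplifications, not a full system topology.
PATHS = {
    "northbridge_and_southbridge": ["CPU", "Northbridge (MCH)", "Southbridge", "Hard disk"],
    "memory_controller_in_cpu": ["CPU", "Southbridge", "Hard disk"],
    "pcie_in_cpu_sas_drive": ["CPU", "HBA", "SAS drive"],
    "pcie_in_cpu_pcie_ssd": ["CPU", "PCIe SSD"],
}


def intermediate_hops(path: list[str]) -> int:
    """Return how many components sit between the CPU (first) and the drive (last)."""
    return len(path) - 2


for name, path in PATHS.items():
    print(f"{name}: {' -> '.join(path)} ({intermediate_hops(path)} in between)")
```

Integrating the memory controller removes one bridge, and integrating PCI Express removes the bridge chipset entirely; with a SAS drive the HBA still remains in the path, which is the component the PCIe SSD path eliminates.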

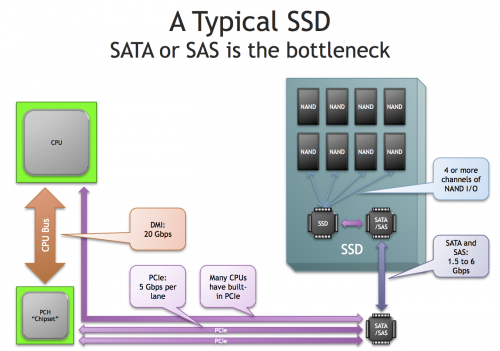

Another significant technology advance delivered solid-state disks. Initial products targeted only a niche market. Backward compatibility was an absolute requirement, so the new SSDs carried the same disk access protocols as traditional hard disks, SAS/SATA for instance. Early SSDs were expensive, and their capacities were too limited to seriously challenge traditional hard disks. But each generation improved capacity and lifetime (durability), and it became evident that solid-state disks were here to stay. Figure 5 captures a typical SSD with legacy disk access protocols such as SAS/SATA. Because the storage media were now solid state rather than mechanical, power requirements and latency dropped dramatically. But this exposed the inefficiencies in the disk access protocol itself.

Figure 5: Legacy IO Path with Flash

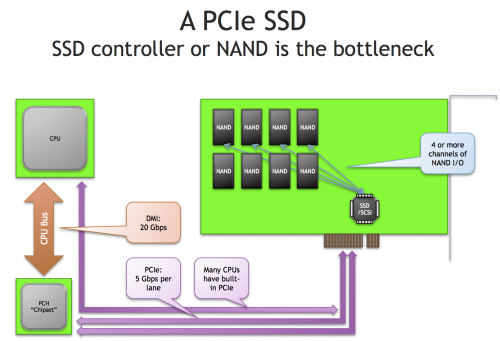

Figure 6: PCIe SSD through IOH

Let us pause a moment to understand these inefficiencies. When the CPU performs a disk access, driver software running on the CPU submits a request over PCIe. The request is carried over PCIe as a payload and reaches the HBA, which decodes the payload and rebuilds the same request, this time signaled through another serial storage protocol (e.g., SAS). The request eventually reaches the disk controller, which performs the operation on the storage media and responds. The response, now in the storage protocol, is received by the HBA, which converts it back to PCIe to hand it over to the CPU. This called into question the role of the HBA in the whole topology.
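
The round trip described above can be modeled as a sequence of hops, each tagged with the protocol used on that link; every hand-off where the protocol changes is a point where the HBA must decode and re-encode the message. This is a minimal sketch: the Hop type, the component labels, and the idea of counting conversions are illustrative assumptions, not part of any driver or standard, and media access inside the drive is not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    src: str
    dst: str
    protocol: str  # link protocol carrying the message on this hop


def round_trip(request_path: list[Hop]) -> list[Hop]:
    """The request follows request_path; the response retraces it in reverse."""
    response_path = [Hop(h.dst, h.src, h.protocol) for h in reversed(request_path)]
    return request_path + response_path


def protocol_conversions(trip: list[Hop]) -> int:
    """Count hand-offs where a message must be re-encoded into a different protocol."""
    return sum(1 for a, b in zip(trip, trip[1:]) if a.protocol != b.protocol)


# Hypothetical component labels: an SSD behind an HBA versus one attached over PCIe.
LEGACY_SAS = [Hop("CPU", "HBA", "PCIe"), Hop("HBA", "Disk controller", "SAS")]
DIRECT_PCIE = [Hop("CPU", "SSD controller", "PCIe")]

for name, path in (("legacy SAS via HBA", LEGACY_SAS), ("direct PCIe", DIRECT_PCIE)):
    print(f"{name}: {protocol_conversions(round_trip(path))} protocol conversions per IO")
```

The legacy path reports 2 conversions per IO (PCIe to SAS on the way out, SAS to PCIe on the way back), while the path with the drive attached directly over PCIe reports 0. Once the media itself is fast, this per-IO translation overhead becomes a visible share of the total latency.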

The full potential of solid-state disks could not be realized because of the limitations discussed above. The industry responded by removing all the intervening protocol conversions: it bypassed the HBA and the legacy disk access protocols and interfaced SSDs directly over PCIe using proprietary protocols. Refer to Figure 6. Fusion-io PCIe SSDs were one such successful product line, and they changed the performance profile of disks for good.

Finally, everyone could see the enormous performance now within the CPU's reach: solid-state Flash storage was on Moore's curve, and disk IO latency had dropped from the traditional millisecond range to microseconds. This was a moment of reckoning, and the technology had to be standardized to enter the mainstream. Thus NVMe was born. NVMe did initially have competitors: SCSI Express, and SATA Express, which provided backward compatibility with existing AHCI-based SATA disks. But NVMe carried no legacy baggage and its software stack is lean; although that stack had to be written from scratch, it became abundantly clear that its advantages far outweighed the additional effort. And so the CPU versus disk performance curves, which had been diverging ever further, have been tamed for now. We can look forward to several other significant innovations in storage, networking, and processor design that aim to tame the disk access latency beast completely.

References:

[1] Southbridge (computing), Wikipedia, https://en.wikipedia.org/wiki/Southbridge_(computing)

[2] Northbridge (computing), Wikipedia, https://en.wikipedia.org/wiki/Northbridge_(computing)

[3] Flash-Plan for the disruption, SNIA – Advancing Storage and Information Technology

[4] A high performance driver ecosystem for NVM Express

[5] NVM Express-Delivering Breakthrough PCIe SSD performance and scalability, Storage Developer Conference, SNIA 2012.

[6] Stephen, Why Are PCIe SSDs So Fast?, http://blog.fosketts.net/2013/06/12/pcie-ssds-fast/