Immunize Customer Experience With These Cloud Storage Security Practices


Cloud Storage, a Great Choice

A 21st-century business looking for uncompromising scalability and performance can hardly come across cloud storage and say, “I’ll pass.” Be it fintech or healthcare, small businesses or multinational enterprises, cloud storage can store and protect business-sensitive data across a wide range of use cases. While modern services like smart data lakes, automated data backup and restore, mobility, and IoT revamp the customer experience, cloud storage provides the underlying infrastructure for data configuration, management, and durability. An enterprise working with cloud storage can expect benefits such as:

- Optimized Storage Costs

- Minimized Operational Overhead

- Continuous Monitoring

- Latency-Based Data Tiering

- Automated Data Backup, Archival & Restore

- Throughput-Intensive Storage

- Smart Workload Management

However, these benefits depend on one prerequisite: a secure cloud storage infrastructure. The data center, and the network it operates in, must be protected from both internal and external mishaps. In this blog, we discuss practices that help you secure your cloud storage infrastructure. To keep things concrete, we use one of the most popular cloud storage services, Amazon S3, as the running example. The practices themselves, however, are generic enough to apply to any cloud storage vendor of your choice.

Comprehending Cloud Storage Security

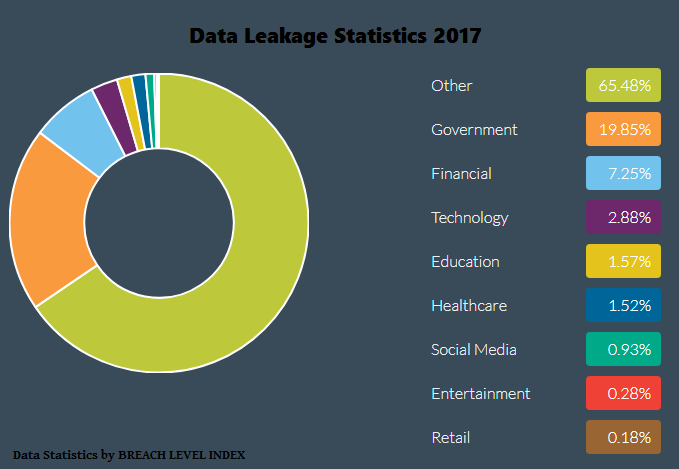

A recent study suggests that 93% of companies are concerned about the security risks associated with the cloud. Even the technical architects and admins who work directly with cloud storage solutions often face security issues they don’t fully comprehend. With ransomware and phishing attacks on the rise, organizations often find themselves skeptical about migrating their data. So, how does one overcome these doubts and work towards a secure, business-boosting storage infrastructure? The answer comes in two parts:

- External Security – The security of the storage infrastructure itself is largely the vendor’s job. In the case of Amazon S3, for instance, AWS takes responsibility for protecting the infrastructure you trust your data with. Because the vendor manages that infrastructure, it is also best placed to carry out regular tests and audits and to verify the security controls of its cloud. Vendors also handle many infrastructure-level compliance requirements, such as obtaining third-party certifications for their data centers, which reduces (but does not eliminate) the regulatory work on your side.

- Internal Security – Security from the inside is where you, as a cloud storage consumer, share the responsibility. Depending on the services you use from your cloud storage vendor, you are expected to know the sensitivity of your data, your organization’s compliance policies, and the regulations mandated by local authorities in your geography. These responsibilities follow from the control you have, as a consumer, over the data that goes into cloud storage. The vendor provides a range of security tools and services, but the final choice of which ones to use, and how to configure them to match the sensitivity of your business data, is yours.

In the rest of this blog, we will discuss the key security services and configurations you can demand from your vendor, so that cloud storage becomes an ally against your competition and not another headache for your business.

Confirm Data Durability

The durability of the infrastructure should be among the first prerequisites for storing mission-critical data in the cloud. Storing data objects redundantly across multiple devices protects them against hardware failure. Amazon S3, for example, synchronously stores data across multiple facilities during PUT and PUT Object copy operations before reporting success. It then continuously checks for lost redundancy so that it can be repaired quickly. Some of the important practices to ensure data durability are:

- Versioning – Ensure that data objects are versioned. Versioning lets you recover earlier versions of an object after an accidental overwrite or deletion, or after an internal or external application failure corrupts it.

- Role-Based Access – Setting up individual accounts or roles for each user, with only the permissions they need, reduces the risk of data leakage through unnecessary access.

- Encryption – Server-side encryption (for data at rest) and in-transit encryption add another layer of protection, keeping data objects confidential during business operations. Amazon S3, for instance, supports Federal Information Processing Standard (FIPS) 140-2 validated cryptographic modules for this purpose.

- Machine Learning – Some cloud storage vendors also offer machine learning-based data protection services. These services recognize sensitive data objects and alert storage admins about unencrypted data, unnecessary access, and shared sensitive objects. Amazon Macie is one such service offered by AWS.
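To make the versioning and encryption practices above concrete, here is a minimal Python sketch. It assumes you manage S3 with the AWS SDK for Python (boto3). The sketch only builds the arguments for the real `put_bucket_versioning` and `put_bucket_encryption` client calls and sends nothing to AWS. The helper names, bucket name, and KMS key ARN are hypothetical placeholders.

```python
import json

# Sketch only: builds keyword arguments for two real boto3 S3 client calls,
# put_bucket_versioning and put_bucket_encryption, without contacting AWS.
# Helper names, the bucket name and the KMS key ARN are hypothetical.


def versioning_request(bucket: str) -> dict:
    """Arguments for s3_client.put_bucket_versioning(**request)."""
    return {
        "Bucket": bucket,
        # Once enabled, overwrites and deletes keep the previous versions.
        "VersioningConfiguration": {"Status": "Enabled"},
    }


def default_encryption_request(bucket: str, kms_key_arn: str) -> dict:
    """Arguments for s3_client.put_bucket_encryption(**request)."""
    return {
        "Bucket": bucket,
        "ServerSideEncryptionConfiguration": {
            "Rules": [
                {
                    # New objects are encrypted at rest with this KMS key
                    # unless a request specifies other encryption settings.
                    "ApplyServerSideEncryptionByDefault": {
                        "SSEAlgorithm": "aws:kms",
                        "KMSMasterKeyID": kms_key_arn,
                    },
                    # S3 Bucket Keys reduce the number of requests made to KMS.
                    "BucketKeyEnabled": True,
                }
            ]
        },
    }


if __name__ == "__main__":
    bucket = "example-customer-data"  # hypothetical
    key_arn = "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE"  # hypothetical
    print(json.dumps(versioning_request(bucket), indent=2))
    print(json.dumps(default_encryption_request(bucket, key_arn), indent=2))
```

Suppose boto3 is installed and credentials are configured. In that case, `boto3.client("s3").put_bucket_versioning(**versioning_request(bucket))` would apply the first setting, and `put_bucket_encryption` the second. Keeping the configuration as plain data makes it easy to review in code before it touches a live bucket.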

Making the Data Unreadable

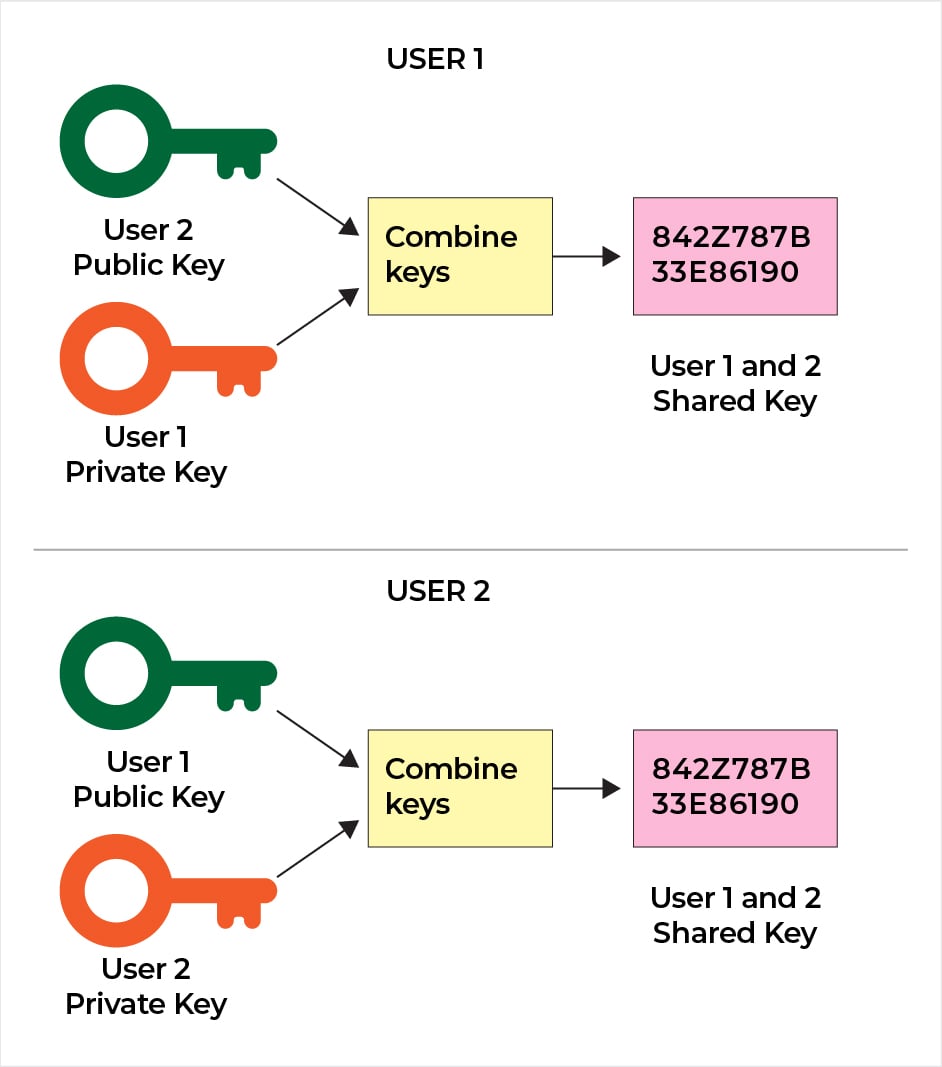

In-transit data (data moving into and out of cloud storage data centers) is vulnerable to network-based attacks. Measures need to be taken so that this data, even if intercepted, is of no use to the attacker. The best method to achieve this is encryption. Protocols like SSL/TLS keep data in transit unreadable without the proper decryption keys. Once the data is stored, the server-side and client-side encryption options offered by cloud storage vendors protect it. In the case of Amazon S3, server-side encryption encrypts objects when they are stored and decrypts them when they are downloaded. As a client, you can manage the encryption keys yourself and choose the tools that suit your requirements.
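If you want to hold the keys yourself, S3 supports server-side encryption with customer-provided keys (SSE-C). You send the key with each request, S3 encrypts or decrypts the object with it, and AWS does not store the key. The sketch below assumes boto3. It generates a 256-bit key and builds the extra `put_object` arguments (`SSECustomerAlgorithm`, `SSECustomerKey`). The helper `put_object_request` and the bucket and object names are hypothetical, included only for illustration, and nothing is sent to AWS.

```python
import secrets

# Sketch of SSE-C request arguments for boto3's put_object/get_object.
# Helper names and the bucket/object names used with them are hypothetical.


def new_customer_key() -> bytes:
    """Generate a random 256-bit key. Losing it means losing the object."""
    return secrets.token_bytes(32)


def sse_c_params(key: bytes) -> dict:
    """Extra arguments required on both put_object and get_object."""
    if len(key) != 32:
        raise ValueError("SSE-C with AES256 needs a 32-byte key")
    # boto3 base64-encodes the key and fills in the key's MD5 header itself.
    return {"SSECustomerAlgorithm": "AES256", "SSECustomerKey": key}


def put_object_request(bucket: str, object_key: str, body: bytes, key: bytes) -> dict:
    """Arguments for s3_client.put_object(**request) using SSE-C."""
    return {"Bucket": bucket, "Key": object_key, "Body": body, **sse_c_params(key)}


if __name__ == "__main__":
    customer_key = new_customer_key()
    request = put_object_request("example-customer-data", "invoices/2024.csv",
                                 b"id,amount\n", customer_key)
    print(sorted(request))
```

The same `SSECustomerAlgorithm` and `SSECustomerKey` arguments must accompany the later `get_object` call. S3 only accepts SSE-C requests over HTTPS. Because AWS never keeps the key, storing it safely becomes your responsibility.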

Managing the Traffic Mischief

Traffic on the public network is vulnerable to data theft, while the private network can fall prey to internal mismanagement. To address both, most cloud vendors offer APIs that require secure connections, so that applications use Transport Layer Security (TLS) when working with cloud storage data. TLS 1.2 or above is generally recommended for modern data storage infrastructure, including the cloud. For Amazon S3 specifically, AWS offers AWS Site-to-Site VPN and AWS Direct Connect for secure connectivity from on-premises networks. For resources within an AWS Region, S3 supports Virtual Private Cloud (VPC) endpoints. These endpoints keep traffic between your VPC and S3 on the AWS network, and bucket policies can restrict access to requests arriving through a specific endpoint.

Cipher suites define the algorithms used to secure a TLS connection. Some of them provide what is known as Perfect Forward Secrecy (PFS). Under PFS, each session uses freshly generated, ephemeral keys, so even if a server’s long-term private key is later compromised, previously recorded sessions cannot be decrypted. As a client, you should look for cloud storage providers that support such suites. Amazon S3 supports cipher suites with DHE (Diffie-Hellman Ephemeral) or ECDHE (Elliptic Curve Diffie-Hellman Ephemeral) key exchange, and most modern systems, such as Java 7 and later, support these modes.
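On the client side, you can refuse connections that lack forward secrecy. Here is a minimal sketch using Python's standard `ssl` module. It assumes a client that makes its own HTTPS connections, for example with `urllib.request`. The context requires TLS 1.2 or later and limits TLS 1.2 to ECDHE and DHE key exchange. The function names are hypothetical. TLS 1.3 suites are not affected by `set_ciphers`, and all of them use ephemeral key exchange anyway.

```python
import ssl


def forward_secret_client_context() -> ssl.SSLContext:
    """Client TLS context requiring TLS 1.2+ and forward-secret key exchange."""
    # create_default_context keeps certificate and hostname verification on.
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # OpenSSL cipher string: for TLS 1.2, allow only ephemeral ECDHE/DHE key
    # exchange with AES-GCM. TLS 1.3 suites are configured separately and
    # are always ephemeral.
    ctx.set_ciphers("ECDHE+AESGCM:DHE+AESGCM")
    return ctx


def tls12_cipher_names(ctx: ssl.SSLContext) -> list[str]:
    """Names of the TLS 1.2 cipher suites the context will offer."""
    return [c["name"] for c in ctx.get_ciphers() if c["protocol"] == "TLSv1.2"]


if __name__ == "__main__":
    for name in tls12_cipher_names(forward_secret_client_context()):
        print(name)
```

Consider a call such as `urllib.request.urlopen(url, context=forward_secret_client_context())`. It would fail the handshake with any server that can offer neither TLS 1.3 nor a forward-secret TLS 1.2 suite.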

Ask Before Access

Admins handling cloud storage operations should follow strict policies for resource access control. Cloud storage providers offer both resource-based policies (attached to the storage resource) and user-based policies (attached to users or roles). It is imperative to choose the right combination so that permissions to your cloud storage infrastructure are tightly defined. In Amazon S3, bucket policies are the resource-based option and AWS Identity and Access Management (IAM) policies are the user-based option. S3 also supports access control lists (ACLs), an older mechanism. AWS now recommends keeping ACLs disabled for most use cases and managing access through policies instead.
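To make the distinction concrete, the Python sketch below builds one policy of each kind for a hypothetical read-only reporting use case. The first is a user-based IAM policy, which has no `Principal` because it is attached to a user or role. The second is a resource-based bucket policy, which names the `Principal` it grants access to. The function names, bucket name, prefix, and role ARN are hypothetical. The actions and condition key (`s3:GetObject`, `s3:ListBucket`, `s3:prefix`) are real S3 ones.

```python
import json

# Sketch: the same read-only access expressed as a user-based IAM policy and
# as a resource-based bucket policy. All names and ARNs are hypothetical.


def read_only_prefix_identity_policy(bucket: str, prefix: str) -> dict:
    """User-based policy: attach to an IAM user or role. It has no Principal."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadObjectsUnderPrefix",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
            {
                # Listing is a bucket-level action, narrowed to the prefix.
                "Sid": "ListOnlyThatPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
        ],
    }


def read_only_prefix_bucket_policy(bucket: str, prefix: str, role_arn: str) -> dict:
    """Resource-based policy: attach to the bucket. It must name a Principal."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowRoleToReadPrefix",
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


if __name__ == "__main__":
    bucket, prefix = "example-customer-data", "reports/"  # hypothetical
    role = "arn:aws:iam::111122223333:role/ExampleAnalyst"  # hypothetical
    print(json.dumps(read_only_prefix_identity_policy(bucket, prefix), indent=2))
    print(json.dumps(read_only_prefix_bucket_policy(bucket, prefix, role), indent=2))
```

The IAM policy could be attached with boto3's `iam.put_role_policy(RoleName=..., PolicyName=..., PolicyDocument=json.dumps(...))` and the bucket policy with `s3.put_bucket_policy(...)`. Within one account, an allow in either policy is generally enough, provided there is no explicit deny. Cross-account access needs an allow on both sides.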

Watchful Monitoring

Reliability, guaranteed availability, and untroubled performance are all the results of Dark Knight-level monitoring. For cloud storage, you need a centralized monitoring dashboard that brings together data from multiple monitoring points. Check whether your cloud vendor provides tools for:

- Automated Single-Metric Monitoring – A monitoring system that watches a specific metric and immediately flags any deviation from the expected values. In AWS, Amazon CloudWatch alarms serve this purpose.

- Request Tracking – Every request triggered by a user or service should be recorded with details such as the source IP and request time, creating a log of the actions taken on cloud storage data. Server access requests are also logged for this purpose.

- Security Incident Logging – Fault tolerance can only be strengthened if every incident is logged along with its associated metrics and the resolution applied. Such logs also feed automated recommendations for handling future incidents involving cloud storage.

Conclusion

There have been multiple episodes in which companies serving high-profile customer bases suffered humiliating attacks that went undetected for a considerable period of time. Such security gaps are not at all conducive to the customer experience we aim to deliver. The security practices above help ensure that the fragile corners of your cloud storage are cemented and toughened up against the looming threats of ransomware and phishing attacks.